Mathematics and the Real World: The Remarkable Role of Evolution in the Making of Mathematics (2014)

CHAPTER VII. COMPUTATIONS AND COMPUTERS

56. WHAT NEXT?

The ideas presented in the last two sections are specific to the era of very fast computers. Yet even the fastest computers today do not fulfill all our desires for rapid computation. Moreover, as computers become faster, our desires and aspirations for calculations become more intense, and we will never reach a situation in which the computational power available satisfies us. In addition, as we have seen, there are problems that theory indicates cannot be solved efficiently. On the other hand, some daily computational tasks are solved by the human brain satisfactorily, tasks that the fastest computers using the best programs known are incapable of handling. In like fashion, processes in nature can be seen that are essentially computational, whose implementation is incomparably superior to that of the fastest computers. Therefore, understanding nature's processes is likely to provide a clue as to how to arrive at more efficient computational processes. Thus nature does not only serve as the main source of objectives, it also provides inspiration for developments on which mathematicians and computer scientists are engaged in their efforts to advance computational ability. In this section we will describe several directions in which mathematics and technology are developing, with the intention of promoting computational possibilities and the possible uses of computers.

The first objective is related to the human brain. The human brain is capable of performing tasks that are out of reach to the fastest computers. For example, facial recognition. Sometimes a quick glance at a large, tightly crowded group of people is sufficient for you to recognize a school friend whom you have not seen for some years. There is no computer program that can come anywhere remotely close to being able to do that. That gap is used for instance in entry to certain websites on the Internet, when you are asked to identify and copy a random alphanumeric series written in a deformed way. The human brain can identify the numbers or letters, while computer programs cannot. A second example is understanding a language and translating from one language to another. Even if you hear just part of a badly structured sentence with an unusual accent or pronunciation, you may well be able to understand what is being said. A computer will understand and react correctly only if the sentence falls within the framework that it has been programmed to recognize. There are programs for translating from one language to another, but their quality is acceptable only if the material is of a technical nature and is in a predetermined structure. Checking the meaning of what is written, particularly reading between the lines and translating accordingly, straightforward tasks for a human translator, are beyond the reach of any computer today. To go further, people sometimes mean the opposite of what they write or say. For instance, someone might want to say that every head injury, however serious, should be treated, and might write, “There is no head injury too serious for you to give up hope” (compare with the example in section 50). Someone else will correctly realize the writer's meaning and will comment and act accordingly, but not a computer. A computer would understand from the written text that one must give up on all head injuries—the correct grammatical meaning. No current translation program can correctly analyze the intention of what is written, which humans do relatively simply. The research area that develops computer programs that can imitate human abilities is called artificial intelligence.

We have spoken of Alan Turing as the man who laid the foundations of the mathematics of computation. He also recognized the gulf between the computational abilities of the human brain and those of the computer, and in an article in 1950 he presented a challenge to the science of artificial intelligence. It is known as Turing's test and is the following. When will we be able to say that a machine has human intelligence? A person can talk to another person or to a machine even when he cannot see his collocutor. When the speaker cannot decide whether his collocutor is a person or a machine, the machine has reached the threshold of human intelligence. The test highlights the great difference between human thinking and the computational procedure. Turing's test does not relate to the extent of the knowledge or the speed of reaction. In these, every computer is vastly superior to humans. The test examines the extent to which the computer can imitate human thinking. We have stressed more than once that evolution has taught us to think intuitively, which is incompatible with mathematical logic. Our judgment is based on our own life experience and also on experience gained through millions of years of development, experience coded into our genes and transmitted by heredity.

Can the computer emulate that experience? The answer is unclear. Artificial intelligence has many achievements to its credit and has made impressive progress, but only time will tell whether programs based on logic can reach the achievements of thinking produced by evolution.

One of the ways of advancing a machine with artificial intelligence is to copy the way the brain itself carries out its thinking tasks. We do not know or understand all the elements of thought in the human brain, but we do have an idea of the brain's anatomy. Specifically, the brain consists of a huge number of neurons, forming a network of neurons, with each neuron connected to several of its neighbors and capable of transmitting an electrical signal to each one of them, according to the condition of the neuron and the environmental conditions. The thought process after receiving any input occurs by means of transfers of these electrical signals, whose number is inconceivable, and they take place simultaneously between different clusters of neurons. At the end of the thought process, the brain is in a new situation, where the output might be an instruction to one of the organs of the body to react in the appropriate way, or preserving this fact (the input) or another in the memory. One direction in which efforts are being made to construct a machine to achieve the same capabilities as the brain is focusing on attempts to copy this structure, called neural networks. That is an attempt to build a mathematical model that takes advantage of the structure created by nature to perform efficient computations. At this stage the target is still far away, partly because the implementation of the mathematical model uses those logical computers that lack human intuition. If indeed intuition and the exercise of human discretion are just the outcome of a huge number of neurons in the brain, computers and computer programs that imitate neuron networks have a chance of passing Turing's test. It may well be, however, that within human thought there is another structure as yet unknown to us.

There is another impressively effective computational process in nature, demonstrated by evolution. The debate between the proponents and opponents of the theory of evolution, with the latter favoring the concept of intelligent design, is not purely science based. The opponents of the theory of evolution do however put forward a scientific argument, that such an ordered and successful structure cannot be the result of a random, unprogrammed process. It should be noted that although randomness does play a role in evolution, the process itself is defined deterministically. Among the random mutations, the one to survive will be the one best adapted to its given environment. The mutations occur in the process of gene reproduction. It is difficult to grasp how efficient the process is. It is hard to imagine the development via mutation and selection from one protein molecule to the vast array of animal life on Earth. That very success serves the opponents of the theory of evolution, who claim that the outcome does not stand to reason. Yet the evidence that that is indeed the way all living things developed keeps accumulating. Such evidence includes computer simulations of the process of evolution, which show that the process is feasible. Over and above computers’ ability to simulate evolution itself, they are capable of imitating the principles of the process of evolution, that is, they can construct algorithms based on mutation and selection to achieve other computational tasks efficiently.

Such algorithms are called genetic algorithms. It is difficult to identify the first stages of the system, as so many scientists contributed to its development in different directions. One well-ordered formulation that incorporated basic assumptions and rules governing its progress, which made the subject very popular, was proposed by John Holland of the University of Michigan in a book published in 1975. We will illustrate the principles of the system by means of an example.

Assume that we have to build a program for takeoffs and landings at a very busy airport. The aim is to produce a timetable that will meet the safety requirements, constraints regarding the time period between landing and takeoff, airlines’ requirements of weekly landings and takeoffs, and other such requirements, while minimizing the time a plane is unnecessarily on the ground at the airport. The problem can be stated in the form of complex mathematical optimization equations, but there is no way of solving such problems completely even with the most advanced computers. The genetic algorithm method offers a different approach. First, construct a timetable, which may be far from optimal, that satisfies the various constraints and requirements. Next, try various mutations, small changes to the timetable, while still fulfilling all the requirements. For each mutation, measure whether the optimization index has improved, and if so, by how much. A computer can easily perform hundreds of such changes and checks. Then select the ten results with the best optimization outcomes. For each of these, repeat the process, that is, introduce further changes, and again select the best ten of these second-generation results. Keep repeating the process until there is no further significant improvement in the results. The final result may depend on the initial timetable proposed, but a computer can go over the process with a different starting timetable. Several such attempts will produce a satisfactory timetable for the airport.

Widespread use is made of the above method in different areas of programming and engineering. This development may be classified as a stage in mathematical developments prompted by the speed of computers. Recently uses of the method in new areas have been published.

The purpose of one of these is to identify the equations, or laws of nature if you will, underlying experimental data. This is not really new in mathematics. Gauss used the measurements that were available regarding the asteroid Ceres and, using the least-squares method on the parameters of the equations of the motion of the asteroid, predicted when Ceres would reappear from behind the Sun. Gauss's starting point was the information that the equations of Ceres's motion were Newton's differential equations. Economics provides another example. The current practice in applied economics research is to assume a general form of dependence between two macroeconomic variables, say input and output, and to use mathematical calculations to find the parameters that provide the best correlation. In such and similar cases, the first proposal, whether Newton's equations or the relation between input and output, is based on human understanding.

The computer scientist Hod Lipson of Cornell University is trying to go one step further. When it is given a collection of data, say data on the motion of a particular body, the computer starts with a general equation and measures to what extent it describes the body's motion. Then the computer tries “mutations,” that is, small changes in the equation itself, and from the thousands of possibilities, it selects say the ten that best describe the body's movement, and so on, advancing by means of mutations. This genetic algorithm ends with the equation that best describes the body's motion. Of course the computer is restricted by the collection of mutations that the program allows, but the incredible speed of the calculations enables efficient examination of a wide range of equations. We could by way of caricature say that if Newton's laws of motion were unknown to us today, it would be enough to know that the physical law is a differential equation connecting location, speed (the first derivative), and acceleration (the second derivative), and the genetic algorithm would reveal the equations from the empirical data. This does not mean that Newton was superfluous; he introduced the differential equations to formulate his laws, and such a step is beyond the capabilities of a computer (at least today's computers). However, with regard to many engineering uses, it may be easier to use the algorithms that Lipson and his colleagues are developing than to try to use analytical logic to find the exact equations that describe motion.

Another even bolder development is the attempt to use a genetic algorithm to develop computer programs. Moshe Sipper of Ben-Gurion University of the Negev is building such algorithms. Assume that we wish to write a computer program that will perform a particular task efficiently. Let us start with a simple program that more or less carries out the task. We let the computer examine the programs that it obtains by introducing small changes to our initial program. From these mutative programs, we select say ten that best perform the set task. We perform another round of mutations on these and continue thus until the program performs the task we set. Of course that is the idea. Its implementation is much harder. It is difficult to estimate today how far these developments, based on a method in which evolution works, will reach and with what measure of success.

We will briefly mention two other directions of development, which in effect are trying to replace electronic computers with computers of a different type. The first is molecular computation. The immune system can be seen as an extremely efficient computation process. The input is the invasion of bacteria into the blood stream. The computer, in this case the immune system, identifies the bacteria and sets in motion the creation of white blood cells of the type needed to kill the bacteria. In most cases the output is the dead bacteria. The computation is performed by the meetings in the blood between the huge number of bacteria and the huge number of blood cells. Biomolecular scientists can draw conclusions regarding the process from the outcome, which is a certain protein. The biomolecular knowledge can be used to calculate mathematical results. From the result of mixing several types of molecules we can learn about the components that went into the mixture. These components can represent mathematical elements, and the mixing element can represent mathematical operations, for instance, on numbers, and thus mathematical computations can be performed. The initiator of this system is one of the pioneers of the RSA encryption system, Leonard Adelman, of whom we spoke in the previous section. The molecular calculations are still in their infancy, and we cannot know where these experiments will lead.

Another direction is quantum computers. At this stage the idea is almost completely theoretical. The computational structure of the quantum computer is the same as that of the electronic computer, thus we are back again within the framework of Turing machines. Every quantum computer can be described by a Turing machine, but the speed of a quantum computer will be much greater. The reason is that the states of the quantum computer are based on waves that describe the states of the electrons. The wave characteristics of quantum superposition make situations that are like an assembly of elementary states possible, that is, a combination of the chances of being in one of those states. In that way the number of possible states can be increased. The result is that the states are not a series of binary numbers, 1 or 0, but a row of numbers from among more values. Specifically, a calculation that, in a normal Turing machine requires an exponential number of steps, is likely to require only a polynomial or even linear number of steps in a quantum computer. In the context of the subjects dealt with in this book, it is not necessary to understand the physics underlying the quantum computer. Progress is essentially a matter of engineering, and is also far from implementation. If a quantum computer is realized, the world image regarding the computational difficulty we described in section 53 will change. Problems that currently require an exponential number of steps will immediately come into the P class; in other words, they will be capable of being solved efficiently. An efficient quantum algorithm for solving the problem of factorizing the product of prime numbers already exists, but the mathematical solution is waiting for the construction of the quantum computer.

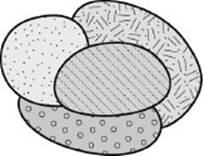

We will conclude with something from a different direction with the potential for a revolution in the way mathematics itself advances. Harnessing the computer for mathematical proofs is nothing new. The most famous example is the proof of the four-color theorem. In section 54 we described the problem: given a two-dimensional map, can it be colored with only four colors? It is easy to draw maps that cannot be colored with only three colors (see the picture). The mathematical problem of whether every two-dimensional map can be colored using four colors was an open question for many years. The problem was already described in the middle of the nineteenth century. Toward the end of that century, in 1879, a solution to the problem was published, but within a few years an error was found. Almost a hundred years passed, and in 1976 two mathematicians from the University of Illinois, Kenneth Appel and Wolfgang Haken presented a full, correct proof, but it was based on calculations performed by a computer. They had to examine many possibilities, about two thousand, and that was done by computer. In this case the computer's contribution was the great saving in time, but it also added to the reliability of the proof. If they would have relied on humans to carry out all the checks, the chance of error would have been much higher. Such computer assistance to mathematics is still technical (and yet some mathematicians are not prepared to accept such technical help as part of a mathematical proof).

Can computers be used to prove mathematical theorems beyond being a computational aid? Doron Zeilberger of Rutgers University in New Jersey claims that the answer is yes. Moreover, he claims, the computer can reveal mathematical facts outside human reach. The type of theorems Zeilberger is working on are mathematical identities. The equality, or identity, (a + b)(a – b) = a2 – b2, was familiar to the Greeks in its abstract state. More complex identities came to light throughout the years of mathematical development, and they do not relate only to numerical identities but also to identities between other mathematical objects. Computer programs that operate on symbolic expressions have existed for many years. Zeilberger used these programs to prove important identities in algebra and used a computer to reveal new identities. He valued the computer's contribution so highly that he added the computer as a coauthor of some of his scientific papers. The computer is named Shalosh B. Ekhad. Clearly Shalosh B. Ekhad coauthored only those papers to which it contributed. On the other hand, it also cooperated with other mathematicians, and it is sole author of several papers. (At the time of writing these lines, there are twenty-three papers listed in Shalosh B. Ekhad's list of publications, and it has cooperated with thirteen authors.) Beyond healthy humor, I think there is something basic in this approach. Zeilberger claims, and that claim cannot be ignored, that the day will come when computers will reveal mathematical theorems that will be difficult for humans to understand.