5 Steps to a 5 AP Psychology, 2014-2015 Edition (2013)

STEP 4. Review the Knowledge You Need to Score High

Chapter 10. Learning

IN THIS CHAPTER

Summary: Did you have to learn how to yawn? Learning is a relatively permanent change in behavior as a result of experience. For a change to be considered learning, it cannot simply have resulted from maturation, inborn response tendencies, or altered states of consciousness. You didn’t need to learn to yawn; you do it naturally. Learning allows you to anticipate the future from past experience and control a complex and ever-changing environment.

This chapter reviews three types of learning: classical conditioning, operant conditioning, and cognitive learning. All three emphasize the role of the environment in the learning process.

Key Ideas

Classical conditioning

Classical conditioning paradigm

Classical conditioning learning curve

Strength of conditioning

Classical aversive conditioning

Higher-order conditioning

Operant conditioning

Thorndike’s instrumental conditioning

Operant conditioning training procedures

Operant aversive conditioning

Reinforcers

Operant conditioning training schedules of reinforcement

Superstitious behavior

Cognitive processes in learning

The contingency model

Latent learning

Insight

Social learning

Biological factors in learning

Preparedness evolves

Instinctive drift

Classical Conditioning

In classical conditioning, the subject learns to give a response it already knows to a new stimulus. The subject associates a new stimulus with a stimulus that automatically and involuntarily brings about the response. A stimulus is a change in the environment that elicits (brings about) a response. A response is a reaction to a stimulus. When food—a stimulus—is placed in our mouths, we automatically salivate—a response. Because we do not need to learn to salivate to food, the food is an unconditional or unconditioned stimulus, and the salivation is an unconditional or unconditioned response. In the early 1900s, Russian physiologist Ivan Pavlov scientifically studied the process by which associations are established, modified, and broken. Pavlov noticed that dogs began to salivate as soon as they saw food (i.e., even before the food was placed in their mouths). The dogs were forming associations between food and events that preceded eating the food. This simple type of learning is called Pavlovian or classical conditioning.

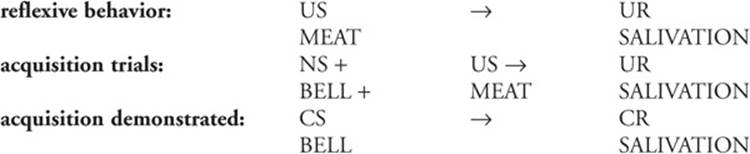

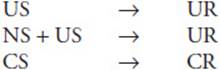

Classical Conditioning Paradigm and the Learning Curve

In classical conditioning experiments, two stimuli, the unconditioned stimulus and a neutral stimulus, are paired. A neutral stimulus (NS) initially does not elicit the response. The unconditioned stimulus (UCS or US) reflexively, or automatically, brings about the unconditioned response (UCR or UR). The conditioned stimulus (CS) is a neutral stimulus (NS) at first, but after being paired with the UCS, it elicits the conditioned response (CR). During Pavlov’s training trials, a bell was rung right before the meat was given to the dogs. With repeated pairing of the food and the bell, acquisition of the conditioned response occurred; the bell alone came to elicit salivation in the dogs. This exemplifies the classical conditioning paradigm, or pattern: before conditioning, the UCS (food) elicits the UCR (salivation) and the NS (bell) does not; during conditioning, the NS is repeatedly presented just before the UCS; after conditioning, the CS (bell) alone elicits the CR (salivation).

If you are having trouble figuring out the difference between the UCS and the CS, ask yourself these questions: What did the organism LEARN to respond to? This is the CS. What did the organism respond to REFLEXIVELY? This is the UCS. The UCR and the CR are usually the same response.

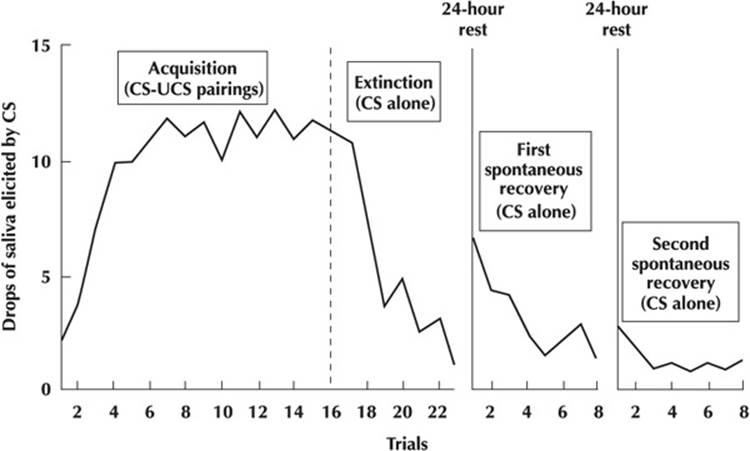

In classical conditioning, the learner is passive. The behaviors learned by association are elicited from the learner. The presentation of the US strengthens or reinforces the behavior. A learning curve for classical conditioning is shown in Figure 10.1.

Figure 10.1 Classical conditioning learning curve.

Strength of Conditioning and Classical Aversive Conditioning

Does the timing of presentation of the NS and US matter in establishing the association for classical conditioning? Different experimental procedures have tried to determine the best presentation time for the NS and the UCS, so that the NS becomes the CS. Delayed conditioning occurs when the NS is presented just before the UCS, with a brief overlap between the two. Trace conditioning occurs when the NS is presented and then disappears before the UCS appears. Simultaneous conditioning occurs when the UCS and NS are paired together at the same time. In backward conditioning, the UCS comes before the NS. In general, delayed conditioning produces the strongest conditioning, trace conditioning produces moderately strong conditioning, simultaneous conditioning produces weak conditioning, and backward conditioning produces no conditioning except in unusual cases. A pregnant woman who vomits hours after eating a burrito often will not eat a burrito again, which is a case of rare backward conditioning.
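The four timing arrangements can be told apart from three moments: when the NS starts, when it ends, and when the UCS starts. The following is a minimal sketch of that comparison. The function name `classify_timing`, the use of seconds, and the `STRENGTH` table are illustrative assumptions, not part of any standard procedure.

```python
def classify_timing(ns_on, ns_off, ucs_on):
    """Name the conditioning procedure from stimulus times (in seconds).

    ns_on / ns_off: when the neutral stimulus starts and ends.
    ucs_on: when the unconditioned stimulus starts.
    Hypothetical helper written for this review, not a standard tool.
    """
    if ucs_on < ns_on:
        return "backward"      # UCS comes before the NS
    if ucs_on == ns_on:
        return "simultaneous"  # both stimuli start together
    if ucs_on < ns_off:
        return "delayed"       # NS starts first and overlaps the UCS
    return "trace"             # NS has ended before the UCS starts


# Relative strength of conditioning, as ranked in the paragraph above.
STRENGTH = {
    "delayed": "strongest",
    "trace": "moderately strong",
    "simultaneous": "weak",
    "backward": "none, except in unusual cases",
}

for times in [(0, 2, 1), (0, 1, 3), (0, 2, 0), (1, 2, 0)]:
    name = classify_timing(*times)
    print(times, name, "->", STRENGTH[name])
```

For example, a bell that sounds from second 0 to second 2 with food arriving at second 1 is delayed conditioning, while a bell that stops at second 1 with food arriving at second 3 is trace conditioning.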

The strength of the UCS and the saliency of the CS in determining how long acquisition takes have also been researched. In the 1920s, John B. Watson and Rosalie Rayner conditioned a nine-month-old infant known as Baby Albert to fear a rat. Their research would probably be considered unethical today. The UCS in their experiment was a loud noise made by hitting a steel rod with a hammer. Albert immediately began to cry, a UCR. Two months later, the infant was given a harmless rat to play with. As soon as Albert went to reach for the rat (NS), the loud noise (UCS) was sounded again. Baby Albert began to cry (UCR). A week later, the rat (CS) was reintroduced to Albert and without any additional pairings with the loud noise, Albert cried (CR) and tried to crawl away. Graphs of the learning curve in most classical conditioning experiments show a steady upward trend over many trials until the CS–UCS connection occurs. In most experiments, several trials must be conducted before acquisition occurs, but when an unconditioned stimulus is strong and the neutral stimulus is striking or salient, classical conditioning can occur in a single trial. Because the loud noise (UCS) was so strong and the white rat (CS) was salient, which means very noticeable, the connection between the two took only one trial of pairing for Albert to acquire the new CR of fear of the rat (CS). This experiment is also important because it shows how phobias and other emotional responses might develop in humans through classical conditioning. Conditioning involving an unpleasant or harmful unconditioned stimulus or reinforcer, such as this conditioning of Baby Albert, is called aversive conditioning.

Unfortunately, Watson and Rayner did not get a chance to rid Baby Albert of his phobia of the rat. In classical conditioning, if the CS is repeatedly presented without the UCS, eventually the CS loses its ability to elicit the CR. Removing the UCS breaks the connection, and extinction, the weakening of the conditioned association, occurs. If Watson had continued to present the rat (CS) without the fear-inducing noise (UCS), eventually Baby Albert would probably have lost his fear of the rat. Although the phenomenon is not fully understood by behaviorists, sometimes the extinguished response shows up again later without any new pairing of the UCS and CS. This is called spontaneous recovery. If Baby Albert had stopped crying whenever the rat appeared, but 2 months later saw another rat and began to cry, he would have been displaying spontaneous recovery. Sometimes a CR needs to be extinguished several times before the association is completely broken.

Generalization occurs when stimuli similar to the CS also elicit the CR without any training. For example, when Baby Albert saw a furry white rabbit, he also showed a fear response. Discrimination occurs when only the CS produces the CR. People and other organisms can learn to discriminate between similar stimuli if the US is consistently paired with only the CS.

Higher-Order Conditioning

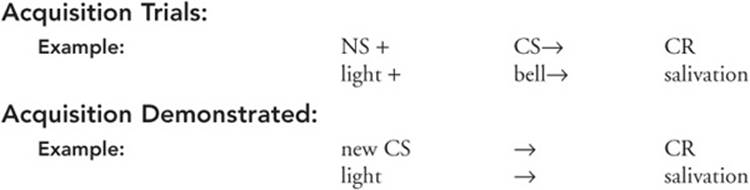

Higher-order conditioning occurs when a well-learned CS is paired with an NS to produce a CR to the NS. In this conditioning, the old CS acts as a UCS. Because the new UCS is not innate, the new CR is not as strong as the original CR. For example, if you taught your dog to salivate to a bell, then flashed a light just before you rang your bell, your dog could learn to salivate to the light without ever having had food associated with it.

This exemplifies the higher-order conditioning paradigm or pattern.

Other applications of classical conditioning include overcoming fears, increasing or decreasing immune functioning, and increasing or decreasing attraction of people or products.

Operant Conditioning

In operant conditioning, an active subject voluntarily emits behaviors and can learn new behaviors. The connection is made between the behavior and its consequence, whether pleasant or not. Many more behaviors can be learned in operant conditioning because they do not rely on a limited number of reflexes. You can learn to sing, dance, or play an instrument as well as to study or clean your room through operant conditioning.

Thorndike’s Instrumental Conditioning

About the same time that Pavlov was classically conditioning dogs, E. L. Thorndike was conducting experiments with hungry cats. He put the cats in “puzzle boxes” and placed fish outside. To get to the fish, the cats had to step on a pedal, which released the door bolt on the box. Through trial and error, the cats moved about the box and clawed at the door. Accidentally at first, they stepped on the pedal and were able to get the reward of the fish. A learning curve shows that the time it took the cats to escape gradually fell. The random movements disappeared until the cat learned that just stepping on the pedal caused the door to open. Thorndike called this instrumental learning, a form of associative learning in which a behavior becomes more or less probable depending on its consequences. He studied how the cats’ actions were instrumental or important in producing the consequences. His Law of Effect states that behaviors followed by satisfying or positive consequences are strengthened (more likely to occur), while behaviors followed by annoying or negative consequences are weakened (less likely to occur).

B. F. Skinner’s Training Procedures

B. F. Skinner called Thorndike’s instrumental conditioning operant conditioning because subjects voluntarily operate on their environment in order to produce desired consequences. Skinner was interested in the ABCs of behavior: antecedents, behaviors, and consequences.

Skinner developed four different training procedures: positive reinforcement, negative reinforcement, punishment, and omission training. In positive reinforcement, or reward training, emission of a behavior or response is followed by a reinforcer that increases the probability that the response will occur again. When a rat presses a lever and is rewarded with food, it tends to press the lever again. Being praised after you contribute to a class discussion is likely to cause you to participate again. According to the Premack principle, a more probable behavior can be used as a reinforcer for a less probable one.

“I use the Premack principle whenever I study. After an hour of studying for a test, I watch TV or call a friend. Then I go back to studying. Knowing I’ll get a reward keeps me working.”

—Chris, AP student

Negative reinforcement takes away an aversive or unpleasant consequence after a behavior has been given. This increases the chance that the behavior will be repeated in the future. When a rat presses a lever that temporarily turns off electrical shocks, it tends to press the lever again. If you have a bad headache and then take an aspirin that makes it disappear, you are likely to take aspirin the next time you have a headache. Both positive and negative reinforcement bring about desired responses, and so both increase or strengthen those behaviors.

In punishment training, a learner’s response is followed by an aversive consequence. Because this consequence is unwanted, the learner stops exhibiting that behavior. A child who gets spanked for running into the street stays on the grass or sidewalk. Punishment should be immediate so that the consequence is associated with the misbehavior, strong enough to stop the undesirable behavior, and consistent. Psychologists caution against the overuse of punishment because it does not teach the learner what he or she should do, suppresses rather than extinguishes behavior, and may evoke hostility or passivity. The learner may become aggressive or give up. An alternative to punishment is omission training. In this training procedure, a response by the learner is followed by taking away something of value from the learner. Both punishment and omission training decrease the likelihood of the undesirable behavior, but in omission training the learner can change this behavior and get back the positive reinforcer. One form of omission training used in schools is called time-out, in which a disruptive child is removed from the classroom until the child changes his or her behavior. The key to successful omission training is knowing exactly what is rewarding and what isn’t for each individual.
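The four training procedures differ on two questions: is the consequence pleasant or aversive, and is it added or taken away after the response? The lookup below is a small sketch of that two-by-two arrangement. The function name `training_procedure` and the string labels are assumptions made for illustration.

```python
def training_procedure(stimulus, change):
    """Return (procedure name, effect on the behavior).

    stimulus: "pleasant" or "aversive"
    change:   "added" or "removed" after the response
    Hypothetical helper summarizing the four procedures in the text.
    """
    table = {
        ("pleasant", "added"): ("positive reinforcement", "increases"),
        ("aversive", "removed"): ("negative reinforcement", "increases"),
        ("aversive", "added"): ("punishment", "decreases"),
        ("pleasant", "removed"): ("omission training", "decreases"),
    }
    return table[(stimulus, change)]


# Aspirin removes a headache, so taking aspirin becomes more likely.
print(training_procedure("aversive", "removed"))
# Time-out removes something valued, so the disruption becomes less likely.
print(training_procedure("pleasant", "removed"))
```

Both reinforcement procedures increase a behavior, and both punishment and omission training decrease it, which is a quick check when a test item tries to blur negative reinforcement with punishment.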

Operant Aversive Conditioning

Negative reinforcement is often confused with punishment. Both are forms of aversive conditioning, but negative reinforcement takes away aversive stimuli: you get rid of something you don’t want. Putting on your seat belt ends an obnoxious buzz or beep, so you quickly learn to put your seat belt on when you hear that sound. There are two types of negative reinforcement: avoidance and escape. Avoidance behavior prevents the aversive stimulus before it begins. A dog jumps over a hurdle to avoid an electric shock, for example. Escape behavior takes away the aversive stimulus after it has already started. The dog gets shocked first and then escapes the shock by jumping over the hurdle. Learned helplessness is the feeling of futility and passive resignation that results from the inability to avoid repeated aversive events. Later, if it becomes possible to avoid or escape the aversive stimuli, it is unlikely that the learner will respond. In contrast to negative reinforcement, punishment follows a behavior with an aversive consequence: you get something you don’t want. By partying instead of studying before a test, you get a bad grade. That grade could result in your failing a course. You learn to stop behaviors that bring about punishment, but learn to continue behaviors that are negatively reinforced.

Reinforcers

A primary reinforcer is something that is biologically important and, thus, rewarding. Food and drink are examples of primary reinforcers. A secondary reinforcer is something neutral that, when associated with a primary reinforcer, becomes rewarding. Gold stars, points, money, and tokens are all examples of secondary reinforcers. A generalized reinforcer is a secondary reinforcer that can be associated with a number of different primary reinforcers. Money is probably the best example because you can get tired of one primary reinforcer like candy, but money can be exchanged for any type of food, another necessity, entertainment, or a luxury item you would like to buy. The operant training system, called a token economy, has been used extensively in institutions such as mental hospitals and jails. Tokens or secondary reinforcers are used to increase a list of acceptable behaviors. After so many tokens have been collected, they can be exchanged for special privileges like snacks, movies, or weekend passes.

Applied behavior analysis, also called behavior modification, is a field that applies the behavioral approach scientifically to solve individual, institutional, and societal problems. Data are gathered both before and after the program is established. For example, training programs have been designed to change employee behavior by reinforcing desired worker behavior, which increases worker motivation.

Teaching a New Behavior

What is the best way to teach and maintain desirable behaviors through operant conditioning? Shaping, positively reinforcing closer and closer approximations of the desired behavior, is an effective way of teaching a new behavior. Each reward comes when the learner gets a bit closer to the final goal behavior. When a little boy is being toilet trained, the child may get rewarded after just saying that he needs to go. The next time he can get rewarded after sitting on the toilet. Eventually, he gets rewarded only after urinating or defecating in the toilet. For a while, reinforcing this behavior every time firmly establishes the behavior. Chaining is used to establish a specific sequence of behaviors by initially positively reinforcing each behavior in a desired sequence and then later rewarding only the completed sequence. Animal trainers at SeaWorld often have dolphins do an amazing series of different behaviors, like swimming the length of a pool, jumping through a hoop, and then honking a horn before they are rewarded with fish. Generally, reinforcement or punishment that occurs immediately after a behavior has a stronger effect than when it is delayed.

Schedules of Reinforcement

A schedule refers to the training program that states how and when reinforcers will be given to the learner. Continuous reinforcement is the schedule that provides reinforcement every time the behavior is exhibited by the organism. Although continuous reinforcement encourages acquisition of a new behavior, not reinforcing the behavior even once or twice could result in extinction of the behavior. For example, if a disposable flashlight always works, when you click it on once or twice and it doesn’t work, you expect that it has quit working and throw it away.

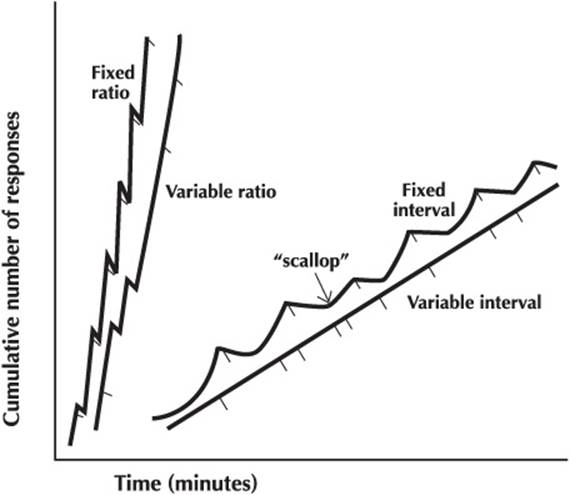

Reinforcing behavior only some of the time, which is using partial reinforcement or an intermittent schedule, maintains behavior better than continuous reinforcement. Partial reinforcement schedules based on the number of desired responses are ratio schedules. Schedules based on time are interval schedules. Fixed ratio schedules reinforce the desired behavior after a specific number of responses have been made. For example, every third time a rat presses a lever in a Skinner box, it gets a food pellet. Fixed interval schedules reinforce the first desired response made after a specific length of time. Fixed interval schedules result in lots of behavior as the time for reinforcement approaches, but little behavior until the next time for reinforcement approaches. For example, the night before an elementary school student gets a weekly spelling test, she will study her spelling words, but not the night after (see Figure 10.2). In a variable ratio schedule, the number of responses needed before reinforcement occurs changes at random around an average. For example, if another of your flashlights works only after you click it a number of times and doesn’t light on the first click, you try clicking it again and again. Because your expectation is different for this flashlight, you are more likely to keep exhibiting the behavior of clicking it. Using slot machines in gambling casinos, gamblers will pull the lever hundreds of times as the anticipation of the next reward gets stronger. On a variable interval schedule, the amount of time that must elapse before a response is reinforced changes from one reinforcement to the next. For example, if your French teacher gives pop quizzes, you never know when to expect them, so you study every night.

Figure 10.2 Partial reinforcement schedules.

fixed ratio schedule—know how much behavior for reinforcement

fixed interval schedule—know when behavior is reinforced

variable ratio schedule—how much behavior for reinforcement changes

variable interval schedule—when behavior is reinforced changes
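The four partial reinforcement schedules can be sketched as rules that decide, response by response, whether a reinforcer is delivered. This is a simplified illustration under stated assumptions: the function names are hypothetical, time is measured in seconds from the last reinforcement, and the "variable" schedules draw from a uniform range around the average, which is only one of many ways to vary them.

```python
import random


def fixed_ratio(n):
    """Reinforce every n-th response."""
    count = 0

    def respond(t):
        nonlocal count
        count += 1
        if count == n:
            count = 0
            return True
        return False

    return respond


def variable_ratio(mean_n, rng):
    """Reinforce after a random number of responses averaging mean_n."""
    needed = rng.randint(1, 2 * mean_n - 1)
    count = 0

    def respond(t):
        nonlocal count, needed
        count += 1
        if count >= needed:
            count = 0
            needed = rng.randint(1, 2 * mean_n - 1)
            return True
        return False

    return respond


def fixed_interval(seconds):
    """Reinforce the first response made once `seconds` have elapsed."""
    available_at = seconds

    def respond(t):
        nonlocal available_at
        if t >= available_at:
            available_at = t + seconds
            return True
        return False

    return respond


def variable_interval(mean_seconds, rng):
    """Like fixed_interval, but the wait changes at random around a mean."""
    available_at = rng.uniform(0, 2 * mean_seconds)

    def respond(t):
        nonlocal available_at
        if t >= available_at:
            available_at = t + rng.uniform(0, 2 * mean_seconds)
            return True
        return False

    return respond


fr3 = fixed_ratio(3)  # the rat that gets a pellet on every third press
print([fr3(t) for t in range(6)])  # [False, False, True, False, False, True]
```

Each function returns a `respond(t)` rule that is called once per response at time `t`. Ratio rules ignore `t` and count responses; interval rules ignore the count and watch the clock, which is the same distinction drawn in Figure 10.2.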

Superstitious Behavior

Have you ever wondered how people develop superstitions? B. F. Skinner accounted for the development of superstitious behaviors in partial reinforcement schedule experiments he performed with pigeons. He found that if food pellets were delivered when a pigeon was performing some idiosyncratic behavior, the pigeon would tend to repeat the behavior to get more food. If food pellets were again delivered when the pigeon repeated the behavior, the pigeon would tend to repeat the behavior over and over, thus indicating the development of “superstitious behavior.” Although there was a correlation between the idiosyncratic behavior and the appearance of food, there was no causal relationship between the superstitious behavior and delivery of the food to the pigeon. But the pigeons acted as if there were. People who play their “lucky numbers” when they gamble or wear their “lucky jeans” to a test may have developed superstitions from the unintended reinforcement of unimportant behavior, too.

Cognitive Processes in Learning

John B. Watson and B. F. Skinner typified behaviorists. They studied only behaviors they could observe and measure: the ABCs of behavior (antecedents, behaviors, and consequences).

The Contingency Model

Cognitivists interpret classical and operant conditioning differently. Beyond making associations between stimuli and learning from rewards and punishment, cognitive theorists believe that humans and other animals are capable of forming expectations and consciously being motivated by rewards. Pavlov’s view of classical conditioning is called the contiguity model. He believed that the closeness in time between the CS and the UCS was most important for making the connection between the two stimuli and that the CS eventually substituted for the UCS. Cognitivist Robert Rescorla challenged this viewpoint, proposing a contingency model of classical conditioning in which the CS tells the organism that the UCS will follow. Although the close pairing in time between the two stimuli is important, the key is how well the CS predicts the appearance of the UCS. Another challenge to Pavlov’s model is what Leon Kamin called the blocking effect. Kamin first classically conditioned a rat to fear (CR) a tone (CS) by pairing the tone with shock (UCS). He then presented a light (NS) together with the tone, again followed by shock. He found that the light alone did not produce conditioned fear. He argued that the rat had already learned that the tone signaled shock, so the light offered no new information. The conditioning effect of the light was blocked.
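One way to see why a stimulus that adds no new information gains little strength is the Rescorla-Wagner learning rule, which this chapter does not present and which is brought in here only as an illustration. In the sketch below, each stimulus has an associative strength, and on every trial the strengths of the stimuli present change in proportion to the "surprise": the gap between what actually happened and what those stimuli together predicted. The learning rate of 0.3, the 30 trials per phase, and the function names are all assumptions chosen for the example.

```python
def rw_trial(strength, stimuli_present, ucs_present, rate=0.3):
    """Apply one Rescorla-Wagner update to the stimuli present on a trial."""
    target = 1.0 if ucs_present else 0.0
    # Surprise: outcome minus the combined prediction of present stimuli.
    surprise = target - sum(strength[s] for s in stimuli_present)
    for s in stimuli_present:
        strength[s] += rate * surprise


def run(phases):
    """Run phases of (stimuli, ucs_present, trials); return final strengths."""
    strength = {"tone": 0.0, "light": 0.0}
    for stimuli, ucs_present, trials in phases:
        for _ in range(trials):
            rw_trial(strength, stimuli, ucs_present)
    return strength


# Blocking: tone is trained first, then tone and light together.
blocked = run([(["tone"], True, 30), (["tone", "light"], True, 30)])
# Control: tone and light are trained together from the start.
control = run([(["tone", "light"], True, 30)])
# Extinction: tone is trained, then presented without the UCS.
extinct = run([(["tone"], True, 30), (["tone"], False, 30)])

print("blocked light:", round(blocked["light"], 3))
print("control light:", round(control["light"], 3))
print("tone after extinction:", round(extinct["tone"], 3))
```

In the blocked case the tone already predicts the shock almost perfectly, so there is almost no surprise left for the light to absorb. In the control case the light shares the prediction with the tone, and in the extinction case the tone's strength falls back toward zero when the UCS stops arriving.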

Although reinforcement or punishment that occurs immediately after a behavior has a stronger effect than delayed consequences, timing sometimes is less critical for human behavior. The ability to delay gratification—forgo an immediate but smaller reward for a postponed greater reward—often affects decisions. Saving money for college, a car, or something else special rather than spending it immediately is an example. People vary in the ability to delay gratification, which partially accounts for the inability of some people to quit smoking or lose weight.

Latent Learning

Cognitive theorists also see evidence of thinking in operant conditioning. Latent learning is defined as learning in the absence of rewards. Edward Tolman studied spatial learning by conducting maze experiments with rats under various conditions. An experimental group of rats did not receive a reward for going through a maze for 10 days, while another group did. The rewarded group made significantly fewer errors navigating the maze. On day 11, both groups got rewards. On day 12, the previously unrewarded group navigated the maze as well as the rewarded group, demonstrating latent learning. He hypothesized that previously unrewarded rats formed a cognitive map or mental picture of the maze during the early nonreinforced trials. Once they were rewarded, they expected future rewards and, thus, were more motivated to improve.

Insight

Have you ever walked out of a class after leaving a problem blank on your test and suddenly the answer popped into your head? Insight is the sudden appearance of an answer or solution to a problem. Wolfgang Kohler exposed chimpanzees to new learning tasks and concluded that they learned by insight. In one study, a piece of fruit was placed outside the cage of a chimpanzee named Sultan, beyond his reach. A short stick was inside the cage. After several unsuccessful attempts to reach the fruit with the stick, Sultan stopped trying and stared at the fruit. Suddenly Sultan bolted up and, using the short stick, raked in a longer stick outside his cage. By using the second stick, he was able to get the fruit. No conditioning had been used.

Social Learning

A type of social cognitive learning is called modeling or observational learning, which occurs by watching the behavior of a model. For example, if you want to learn a new dance step, first you watch someone else do it. Next you try to imitate what you saw the person do. The cognitive aspect comes in when you think through how the person is moving various body parts and, keeping that in mind, try to do it yourself. Learning by observation is adaptive, helping us save time and avoid danger. Albert Bandura, who pioneered the study of observational learning, outlined four steps in the process: attention, retention, reproduction, and motivation. In his famous experiment using inflated “Bobo” dolls, he showed three groups of children a scene where a model kicked, punched, and hit the Bobo doll. One group saw the model rewarded, another group saw no consequences, and the third group saw the model punished. Each child then went to a room with a Bobo doll and other toys. The children who saw the model punished kicked, punched, and hit the Bobo doll less than the other children. Later, when they were offered rewards to imitate what they had seen the model do, that group of children was as able to imitate the behavior as the others. Further research indicated that viewing violence reduces our sensitivity to the sight of violence, increases the likelihood of aggressive behavior, and decreases our concerns about the suffering of victims. Feelings of pride or shame in ourselves for doing something can be important internal reinforcers that influence our behavior.

Abstract learning goes beyond classical and operant conditioning and shows that animals such as pigeons and dolphins can understand simple concepts and apply simple decision rules. In one experiment, pigeons pecked at different-colored squares. The pigeon was first shown a red square and then two squares—one red and the other green. In matching-to-sample problems, pecking the red square, or “same,” was rewarded. In oddity tasks, pecking the green square, or “different,” would bring the reward. To prove this wasn’t merely operant conditioning, the stimuli were changed, and in 80% of the trials, the pigeons proved successful in making the transfer of “same” or “different.”

Biological Factors in Learning

Mirror neurons in the premotor cortex and in portions of the temporal and parietal lobes provide a biological basis for observational learning. These neurons are activated not only when you perform an action, but also when you observe someone else perform a similar action. They transform the sight of someone else’s action into the motor program you would use to do the same thing and let you experience similar sensations or emotions, the basis for empathy.

Preparedness Evolves

Taste aversions are an interesting biological application of classical conditioning. A few hours after your friend ate Brussels sprouts for the first time, she vomited. Although a stomach virus (UCS) caused the vomiting (UCR), your friend refuses to eat Brussels sprouts again. She developed a conditioned taste aversion, an intense dislike and avoidance of a food because of its association with an unpleasant or painful stimulus through backward conditioning. According to some psychologists, conditioned taste aversions are probably adaptive responses of organisms to foods that could sicken or kill them. Evolutionarily successful organisms are biologically predisposed or biologically prepared to associate illness with bitter and sour foods. Preparedness means that through evolution, animals are biologically predisposed to easily learn behaviors related to their survival as a species, and that behaviors contrary to an animal’s natural tendencies are learned slowly or not at all. People are more likely to learn to fear snakes or spiders than flowers or happy faces. John Garcia and colleagues experimented with rats exposed to radiation, and others exposed to poisons. They found that rats developed conditioned taste aversions even when they did not become nauseated until hours after being exposed to a taste, which is sometimes referred to as the Garcia effect. Similarly, cancer patients undergoing chemotherapy develop loss of appetite. Garcia and colleagues also found that there are biological constraints on the ease with which particular stimuli can be associated with particular responses. Rats have a tendency to associate nausea and dizziness with tastes, but not with sights and sounds. Rats also tend to associate pain with sights and sounds, but not with tastes.

Instinctive Drift

Sometimes, operantly conditioned animals failed to behave as expected. Wild rats already conditioned in Skinner boxes sometimes reverted to scratching and biting the lever. In different experiments, Keller and Marian Breland found that stimuli that represented food were treated as actual food by chickens and raccoons. The Brelands attributed this to the strong evolutionary history of the animals that overrode conditioning. They called this instinctive drift—a conditioned response that drifts back toward the natural (instinctive) behavior of the organism. Wild animal trainers must stay vigilant even after training their animals because the animals may revert to dangerous behaviors.

Review Questions

Directions: For each item, choose the letter of the choice that best completes the statement or answers the question.

1. Once Pavlov’s dogs learned to salivate to the sound of a tuning fork, the tuning fork was a(n)

(A) unconditioned stimulus

(B) neutral stimulus

(C) conditioned stimulus

(D) unconditioned response

(E) conditioned response

2. Shaping is

(A) a pattern of responses that must be made before classical conditioning is completed

(B) rewarding behaviors that get closer and closer to the desired goal behavior

(C) completing a set of behaviors in succession before a reward is given

(D) giving you chocolate pudding to increase the likelihood you will eat more carrots

(E) inhibition of new learning by previous learning

3. John loves to fish. He puts his line in the water and leaves it there until he feels a tug. On what reinforcement schedule is he rewarded?

(A) continuous reinforcement

(B) fixed ratio

(C) fixed interval

(D) variable ratio

(E) variable interval

4. Chimpanzees given tokens for performing tricks were able to put the tokens in vending machines to get grapes. The tokens acted as

(A) primary reinforcers

(B) classical conditioning

(C) secondary reinforcers

(D) negative reinforcers

(E) unconditioned reinforcers

5. Which of the following best reflects negative reinforcement?

(A) Teresa is scolded when she runs through the house yelling.

(B) Lina is not allowed to watch television until after she has finished her homework.

(C) Greg changes his math class so he doesn’t have to see his old girlfriend.

(D) Aditya is praised for having the best essay in the class.

(E) Alex takes the wrong medicine and gets violently ill afterwards.

6. Watson and Rayner’s classical conditioning of “Little Albert” was helpful in explaining that

(A) some conditioned stimuli do not generalize

(B) human emotions such as fear are subject to classical conditioning

(C) drug dependency is subject to classical as well as operant conditioning

(D) small children are not as easily conditioned as older children

(E) fears of rats and rabbits are innate responses previously undiscovered

7. Jamel got very sick after eating some mushrooms on a pizza at his friend’s house. He didn’t know that he had a stomach virus at the time, blamed his illness on the mushrooms, and refused to eat them again. Which of the following is the unconditioned stimulus for his taste aversion to mushrooms?

(A) pizza

(B) stomach virus

(C) mushrooms

(D) headache

(E) aversion to mushrooms

8. If a previous experience has given your pet the expectancy that nothing it does will prevent an aversive stimulus from occurring, it will likely

(A) be motivated to seek comfort from you

(B) experience learned helplessness

(C) model the behavior of other pets in hopes of avoiding it

(D) seek out challenges like this in the future to disprove the expectation

(E) engage in random behaviors until one is successful in removing the stimulus

9. While readying to take a free-throw shot, you suddenly arrive at the answer to a chemistry problem you’d been working on several hours before. This is an example of

(A) insight

(B) backward conditioning

(C) latent learning

(D) discrimination

(E) the Premack Principle

10. If the trainer conditions the pigeon to peck at a red circle and then only gives him a reward if he pecks at the green circle when both a red and green circle appear, the pigeon is demonstrating

(A) matching-to-sample generalization

(B) abstract learning

(C) intrinsic motivation

(D) insight

(E) modeling

11. Latent learning is best described by which of the following?

(A) innate responses of an organism preventing new learning and associations

(B) unconscious meaning that is attributed to new response patterns

(C) response patterns that become extinguished gradually over time

(D) delayed responses that occur when new stimuli are paired with familiar ones

(E) learning that occurs in the absence of rewards

12. Rats were more likely to learn an aversion to water accompanied by bright lights and noise if these cues were associated with electric shocks rather than with flavors or poisoned food. This illustrates

(A) insight

(B) preparedness

(C) extinction

(D) observational learning

(E) generalization

13. Which of the following responses is not learned through operant conditioning?

(A) a rat learning to press a bar to get food

(B) dogs jumping over a hurdle to avoid electric shock

(C) fish swimming to the top of the tank when a light goes on

(D) pigeons learning to turn in circles for a reward

(E) studying hard for good grades on tests

14. Spontaneous recovery refers to the

(A) reacquisition of a previously learned behavior

(B) reappearance of a previously extinguished CR after a rest period

(C) return of a behavior after punishment has ended

(D) tendency of newly acquired responses to be intermittent at first

(E) organism’s tendency to forget previously learned responses, but to relearn them more quickly during a second training period

Answers and Explanations

1. C—The tuning fork is the CS and salivation is the CR. Pavlov’s dogs learned to salivate to the tuning fork.

2. B—The definition of shaping is reinforcing behaviors that get closer and closer to the goal.

3. E—Variable interval is correct. John doesn’t know when a fish will be on his line. Catching fish depends not on the number of times he pulls in his line, but on when he pulls it in.

4. C—Tokens serve as secondary reinforcers that the chimps learned to respond to positively. They were connected with the primary reinforcer—grapes.

5. C—Greg transferred from the class to avoid having to see his old girlfriend. Avoidance is one type of negative reinforcement that takes away something aversive.

6. B—Watson and Rayner’s experiment with Little Albert showed that emotional responses and phobias may be learned through classical conditioning.

7. B—The stomach virus is the UCS that automatically caused him to get sick. The mushrooms are the CS, which he learned to avoid because he associated them with the sickness that the virus caused.

8. B—Learned helplessness occurs when an organism has the experience that nothing it does will prevent an aversive stimulus from occurring.

9. A—Insight learning is the sudden appearance of a solution to a problem.

10. B—The animal showed understanding of a concept when it was able to tell the difference between the red and green circles, and only pecked at the green circle to get a reward.

11. E—Latent learning is defined as learning in the absence of rewards.

12. B—The rats were biologically prepared to associate two external events, such as the shock and the lights and sounds, with each other.

13. C—The fish swimming to the top of the tank when the light goes on shows classical conditioning.

14. B—Spontaneous recovery occurs when a conditioned response is extinguished, but later reappears when the CS is present again without retraining.

Rapid Review

Learning—a relatively permanent change in behavior as a result of experience (nurture).

Classical conditioning—learning which takes place when two or more stimuli are presented together; a neutral stimulus is paired repeatedly with an unconditioned stimulus until the neutral stimulus acquires the capacity to elicit a similar response. The subject learns to give a response it already knows to a new stimulus. Terms and concepts associated with classical conditioning include:

• Stimulus—a change in the environment that elicits (brings about) a response.

• Neutral stimulus (NS)—a stimulus that initially does not elicit a response.

• Unconditioned stimulus (UCS or US) reflexively, or automatically, brings about the unconditioned response.

• Unconditioned response (UCR or UR)—an automatic, involuntary reaction to an unconditioned stimulus.

• Conditioned stimulus (CS)—a neutral stimulus (NS) at first, but when paired with the UCS, it elicits the conditioned response (CR).

• Acquisition—in classical conditioning, learning to give a known response to a new stimulus, the neutral stimulus.

• Extinction—repeatedly presenting a CS without a UCS weakens the CR until it no longer occurs, returning the CS to the status of an NS.

• Spontaneous recovery—after extinction, and without training, the previous CS suddenly elicits the CR again temporarily.

• Generalization—stimuli similar to the CS also elicit the CR without training.

• Discrimination—the ability to tell the difference between stimuli so that only the CS elicits the CR.

• Higher-order conditioning—classical conditioning in which a well-learned CS is paired with an NS to produce a CR to the NS.

Aversive conditioning—learning involving an unpleasant or harmful stimulus or reinforcer.

Avoidance behavior takes away the unpleasant stimulus before it begins.

Escape behavior takes away the unpleasant stimulus after it has already started.

Instrumental learning—associative learning in which a behavior becomes more or less probable depending on its consequences.

Law of Effect—behaviors followed by positive consequences are strengthened while behaviors followed by annoying or negative consequences are weakened.

Operant conditioning—learning that occurs when an active learner performs certain voluntary behavior, and the consequences of the behavior (pleasant or unpleasant) determine the likelihood of its recurrence. Terms and concepts associated with operant conditioning include:

• Positive reinforcement—a rewarding consequence that follows a voluntary behavior thereby increasing the probability the behavior will be repeated.

• Primary reinforcer—something that is biologically important and, thus, rewarding.

• Secondary reinforcer—something rewarding because it is associated with a primary reinforcer.

• Generalized reinforcer—secondary reinforcer associated with a number of different primary reinforcers.

• Premack principle—a more probable behavior can be used as a reinforcer for a less probable one.

• Negative reinforcement—removal of an aversive consequence that follows a voluntary behavior thereby increasing the probability the behavior will be repeated; two types are escape and avoidance.

• Punishment—an aversive consequence that follows a voluntary behavior thereby decreasing the probability the behavior will be repeated.

• Omission training—removal of a rewarding consequence that follows a voluntary behavior thereby decreasing the probability the behavior will be repeated.

• Shaping—positively reinforcing closer and closer approximations of a desired behavior to teach a new behavior.

• Chaining establishes a specific sequence of behaviors by initially positively reinforcing each behavior in a desired sequence and then later rewarding only the completed sequence.

A reinforcement schedule states how and when reinforcers will be given to the learner.

• Continuous reinforcement—schedule that provides reinforcement following the particular behavior every time it is exhibited; best for acquisition of a new behavior.

• Partial reinforcement or intermittent schedule—occasional reinforcement of a particular behavior; produces response that is more resistant to extinction.

• Fixed ratio—reinforcement of a particular behavior after a specific number of responses.

• Fixed interval—reinforcement of the first particular response made after a specific length of time.

• Variable ratio—reinforcement of a particular behavior after a number of responses that changes at random around an average number.

• Variable interval—reinforcement of the first particular response made after a length of time that changes at random around an average time period.
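The four partial schedules above can be sketched as a short simulation. This is only an illustrative sketch, not part of the original review: the function names, the once-per-second learner, and the choice of a uniform random spread around the average are all assumptions made for the example.

```python
import random


def fixed_ratio(n):
    """Reinforce every n-th response (n = 1 is continuous reinforcement)."""
    count = 0

    def respond(t):
        nonlocal count
        count += 1
        if count == n:
            count = 0
            return True
        return False

    return respond


def variable_ratio(mean_n, rng):
    """Reinforce after a number of responses that varies at random around mean_n.

    Assumption: the required count is drawn uniformly from 1 .. 2*mean_n - 1.
    """
    count = 0
    target = rng.randint(1, 2 * mean_n - 1)

    def respond(t):
        nonlocal count, target
        count += 1
        if count >= target:
            count = 0
            target = rng.randint(1, 2 * mean_n - 1)
            return True
        return False

    return respond


def fixed_interval(seconds):
    """Reinforce the first response made after `seconds` have elapsed."""
    available_at = seconds

    def respond(t):
        nonlocal available_at
        if t >= available_at:
            available_at = t + seconds
            return True
        return False

    return respond


def variable_interval(mean_seconds, rng):
    """Reinforce the first response after a wait that varies around mean_seconds.

    Assumption: the wait is drawn uniformly from 0 .. 2*mean_seconds.
    """
    available_at = rng.uniform(0, 2 * mean_seconds)

    def respond(t):
        nonlocal available_at
        if t >= available_at:
            available_at = t + rng.uniform(0, 2 * mean_seconds)
            return True
        return False

    return respond


def count_reinforcers(schedule, response_times):
    """Feed each response time to a schedule and count the reinforcers earned."""
    return sum(schedule(t) for t in response_times)


# Hypothetical learner: one response per second vs. two per second, for 60 s.
slow = list(range(1, 61))
fast = [k * 0.5 for k in range(1, 121)]
print("FR-5 :", count_reinforcers(fixed_ratio(5), slow),
      count_reinforcers(fixed_ratio(5), fast))
print("FI-10:", count_reinforcers(fixed_interval(10), slow),
      count_reinforcers(fixed_interval(10), fast))
```

In this sketch, doubling the response rate doubles the reinforcers earned on the ratio schedule but leaves the interval schedule unchanged, which is why pulling in a fishing line more often does not catch more fish on an interval schedule.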

Superstitious behaviors can result from unintended reinforcement of unimportant behavior.

Behavior modification—a field that applies the behavioral approach scientifically to solve problems (applied behavior analysis).

Token economy—operant training system that uses secondary reinforcers to increase appropriate behavior; learners can exchange secondary reinforcers for desired rewards.

(Biological) Preparedness—predisposition to easily learn behaviors related to survival of the species.

Instinctive drift—a conditioned response that moves toward the natural behavior of the organism.

Cognitivists interpret classical and operant conditioning differently from behaviorists.

Cognitivists reject Pavlov’s contiguity theory that classical conditioning is based on the association in time of the CS prior to the UCS.

Cognitivist Richard Rescorla’s contingency theory says that the key to classical conditioning is how well the CS predicts the appearance of the UCS.
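One common way to put a number on "how well the CS predicts the UCS" is to compare the probability of the UCS when the CS is present with its probability when the CS is absent. The sketch below assumes that formalization; the function name and the trial counts are hypothetical and are not taken from Rescorla's experiments.

```python
def contingency(cs_and_ucs, cs_only, ucs_only, neither):
    """Return P(UCS | CS) - P(UCS | no CS) from counts of four trial types.

    A value near 1 means the CS is a good predictor of the UCS;
    a value near 0 means the CS tells the learner nothing.
    """
    cs_trials = cs_and_ucs + cs_only
    no_cs_trials = ucs_only + neither
    if cs_trials == 0 or no_cs_trials == 0:
        raise ValueError("need at least one trial with and one without the CS")
    p_ucs_given_cs = cs_and_ucs / cs_trials
    p_ucs_given_no_cs = ucs_only / no_cs_trials
    return p_ucs_given_cs - p_ucs_given_no_cs


# Hypothetical counts: the tone is followed by shock on 8 of 10 tone trials.
# Case 1: shock never occurs without the tone, so the tone is informative.
predictive = contingency(cs_and_ucs=8, cs_only=2, ucs_only=0, neither=10)
# Case 2: shock is just as likely without the tone, so the tone is uninformative.
uninformative = contingency(cs_and_ucs=8, cs_only=2, ucs_only=8, neither=2)
print(predictive, uninformative)
```

Both cases pair the CS and UCS equally often, so a pure contiguity account would treat them alike; under a contingency account, only the first case should produce strong conditioning.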

Latent learning—learning in the absence of rewards.

Insight—the sudden appearance of an answer or solution to a problem.

Observational learning—learning that occurs by watching the behavior of a model.