Advanced Calculus of Several Variables (1973)

Part I. Euclidean Space and Linear Mappings

Chapter 6. DETERMINANTS

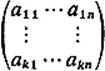

It is clear by now that a method is needed for deciding whether a given n-tuple of vectors a1, . . . , an in ℝⁿ are linearly independent (and therefore constitute a basis for ℝⁿ). We discuss in this section the method of determinants. The determinant of an n × n matrix A is a real number denoted by det A or |A|.

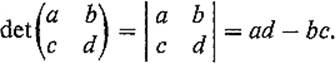

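The student is no doubt familiar with the definition of the determinant of a 2 × 2 or 3 × 3 matrix. If A = (aij) is 2 × 2, then

$$\det A = \begin{vmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{vmatrix} = a_{11}a_{22} - a_{12}a_{21}.$$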

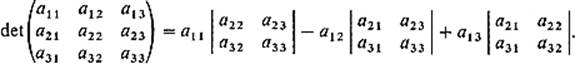

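For 3 × 3 matrices we have expansions by rows and columns. For example, the formula for expansion by the first row is

$$\det A = a_{11}\begin{vmatrix} a_{22} & a_{23} \\ a_{32} & a_{33} \end{vmatrix} - a_{12}\begin{vmatrix} a_{21} & a_{23} \\ a_{31} & a_{33} \end{vmatrix} + a_{13}\begin{vmatrix} a_{21} & a_{22} \\ a_{31} & a_{32} \end{vmatrix}.$$

Formulas for expansions by rows or columns are greatly simplified by the following notation. If A is an n × n matrix, let Aij denote the (n − 1) × (n − 1) submatrix obtained from A by deletion of the ith row and the jth column of A. Then the above formula can be written

$$\det A = a_{11}\det A_{11} - a_{12}\det A_{12} + a_{13}\det A_{13}.$$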

The formula for expansion of the n × n matrix A by the ith row is

$$\det A = \sum_{j=1}^{n} (-1)^{i+j} a_{ij} \det A_{ij} \tag{1}$$

while the formula for expansion by the jth column is

$$\det A = \sum_{i=1}^{n} (-1)^{i+j} a_{ij} \det A_{ij} \tag{2}$$

For example, with n = 3 and j = 2, (2) gives

$$\det A = -a_{12}\det A_{12} + a_{22}\det A_{22} - a_{32}\det A_{32}$$

as the expansion of a 3 × 3 matrix by its second column.
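Formulas (1) and (2) translate directly into a recursive computation. The following is a minimal Python sketch, not an efficient algorithm; the function names `minor`, `det_by_row`, and `det_by_column` and the sample matrix are assumptions introduced for illustration. Indices are 0-based, which leaves the sign (−1)^(i+j) unchanged.

```python
def minor(a, i, j):
    """Return the submatrix A_ij: delete row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(a) if k != i]


def det_by_row(a, i=0):
    """Determinant by expansion along row i, as in formula (1)."""
    n = len(a)
    if n == 1:
        return a[0][0]
    return sum((-1) ** (i + j) * a[i][j] * det_by_row(minor(a, i, j))
               for j in range(n))


def det_by_column(a, j=0):
    """Determinant by expansion along column j, as in formula (2)."""
    n = len(a)
    if n == 1:
        return a[0][0]
    return sum((-1) ** (i + j) * a[i][j] * det_by_column(minor(a, i, j))
               for i in range(n))


# Hypothetical sample matrix, chosen only for illustration.
A = [[2, 0, 1],
     [1, 3, -1],
     [0, 5, 4]]

row_values = [det_by_row(A, i) for i in range(3)]
col_values = [det_by_column(A, j) for j in range(3)]
print(row_values, col_values)
```

Every row and every column yields the same number for this matrix, which is the consistency that a definition by expansion would have to establish in general.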

One approach to the problem of defining determinants of matrices is to define the determinant of an n × n matrix by means of formulas (1) and (2), assuming inductively that determinants of (n − 1) × (n − 1) matrices have been previously defined. Of course it must be verified that expansions along different rows and/or columns give the same result. Instead of carrying through this program, we shall state without proof the basic properties of determinants (I–IV below), and then proceed to derive from them the specific facts that will be needed in subsequent chapters. For a development of the theory of determinants, including proofs of these basic properties, the student may consult the chapter on determinants in any standard linear algebra textbook.

In the statement of Property I, we are thinking of a matrix A as being a function of the column vectors of A, det A = D(A1, . . . , An).

(I) There exists a unique (that is, one and only one) alternating, multilinear function D, from n-tuples of vectors in ℝⁿ to real numbers, such that D(e1, . . . , en) = 1.

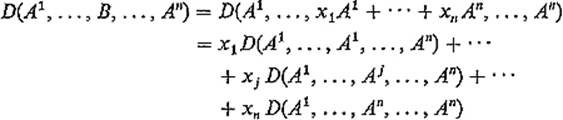

The assertion that D is multilinear means that it is linear in each variable separately. That is, for each i = 1, . . . , n,

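$$D(\mathbf{a}_1, \ldots, x\mathbf{a}_i + y\mathbf{b}_i, \ldots, \mathbf{a}_n) = x\,D(\mathbf{a}_1, \ldots, \mathbf{a}_i, \ldots, \mathbf{a}_n) + y\,D(\mathbf{a}_1, \ldots, \mathbf{b}_i, \ldots, \mathbf{a}_n). \tag{3}$$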

The assertion that D is alternating means that D(a1, . . . , an) = 0 if ai = aj for some i ≠ j. In Exercises 6.1 and 6.2, we ask the student to derive from the alternating multilinearity of D that

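$$D(\mathbf{a}_1, \ldots, r\mathbf{a}_i, \ldots, \mathbf{a}_n) = r\,D(\mathbf{a}_1, \ldots, \mathbf{a}_i, \ldots, \mathbf{a}_n), \tag{4}$$

$$D(\mathbf{a}_1, \ldots, \mathbf{a}_i + r\mathbf{a}_j, \ldots, \mathbf{a}_n) = D(\mathbf{a}_1, \ldots, \mathbf{a}_i, \ldots, \mathbf{a}_n) \quad (i \neq j), \tag{5}$$

and

$$D(\mathbf{a}_1, \ldots, \mathbf{a}_i, \ldots, \mathbf{a}_j, \ldots, \mathbf{a}_n) = -D(\mathbf{a}_1, \ldots, \mathbf{a}_j, \ldots, \mathbf{a}_i, \ldots, \mathbf{a}_n) \quad (i \neq j). \tag{6}$$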

Given the alternating multilinear function provided by (I), the determinant of the n × n matrix A can then be defined by

$$\det A = D(A^1, \ldots, A^n), \tag{7}$$

where A1, . . . , An are as usual the column vectors of A. Then (4) above says that the determinant of A is multiplied by r if some column of A is multiplied by r, (5) that the determinant of A is unchanged if a multiple of one column is added to another column, while (6) says that the sign of det A is changed by an interchange of any two columns of A. By virtue of the following fact, the word “column” in each of these three statements may be replaced throughout by the word “row.”

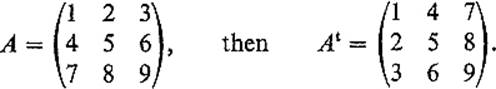

(II) The determinant of the matrix A is equal to that of its transpose At.

The transpose At of the matrix A = (aij) is obtained from A by interchanging the elements aij and aji, for each i and j. Another way of saying this is that the matrix A is reflected through its principal diagonal. We therefore write At = (aji) to state the fact that the element in the ith row and jth column of At is equal to the one in the jth row and ith column of A. For example, if

Still another way of saying this is that At is obtained from A by changing the rows of A to columns, and the columns to rows.
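The column rules (4), (5), (6) and Property II can be checked numerically on a small matrix. The sketch below is only an illustration on one example, not a proof; the helper names `det` and `transpose`, the sample matrix, and the multipliers 5 and 7 are assumptions chosen here.

```python
def det(a):
    """Determinant by cofactor expansion along the first row."""
    if not a:
        return 1  # determinant of the empty (0 x 0) matrix
    return sum((-1) ** j * a[0][j] * det([row[:j] + row[j + 1:] for row in a[1:]])
               for j in range(len(a)))


def transpose(a):
    """Change the rows of a to columns, and the columns to rows."""
    return [list(col) for col in zip(*a)]


# Hypothetical sample matrix, chosen only for illustration.
A = [[2, 0, 1],
     [1, 3, -1],
     [0, 5, 4]]
c1, c2, c3 = transpose(A)  # the column vectors of A
d = det(A)

# Each matrix below is assembled from its columns, then transposed back.
scaled = det(transpose([c1, [5 * x for x in c2], c3]))                   # (4), r = 5
sheared = det(transpose([[x + 7 * y for x, y in zip(c1, c3)], c2, c3]))  # (5), r = 7
swapped = det(transpose([c3, c2, c1]))                                   # (6)
transposed = det(transpose(A))                                           # (II)

print(d, scaled, sheared, swapped, transposed)
```

Here `scaled` equals 5 times `d`, `sheared` and `transposed` equal `d`, and `swapped` equals the negative of `d`, as the four statements predict.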

(III) The determinant of a matrix can be calculated by expansions along rows and columns, that is, by formulas (1) and (2) above.

In a systematic development, it would be proved that formulas (1) and (2) give definitions of det A that satisfy the conditions of Property I and therefore, by the uniqueness of the function D, each must agree with the definition in (7) above.

The fourth basic property of determinants is the fact that the determinant of the product of two matrices is equal to the product of their determinants.

(IV) det AB = (det A)(det B)

As an application, recall that the n × n matrix B is said to be an inverse of the n × n matrix A if and only if AB = BA = I, where I denotes the n × n identity matrix. In this case we write B = A−1 (the matrix A−1 is unique if it exists at all; see Exercise 6.3), and say that A is invertible. Since the fact that D(e1, . . . , en) = 1 means that det I = 1, (IV) gives (det A)(det A−1) = det I = 1 ≠ 0. So a necessary condition for the existence of A−1 is that det A ≠ 0. We prove in Theorem 6.3 that this condition is also sufficient. The n × n matrix A is called nonsingular if det A ≠ 0, singular if det A = 0.
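Property IV and the resulting necessary condition for invertibility can be illustrated on 2 × 2 matrices. This is a small sketch under stated assumptions: the helpers `det` and `matmul` and the matrices `A`, `A_inv`, `C`, and `S` are hypothetical examples, not taken from the text.

```python
def det(a):
    """Determinant by cofactor expansion along the first row."""
    if not a:
        return 1  # determinant of the empty (0 x 0) matrix
    return sum((-1) ** j * a[0][j] * det([row[:j] + row[j + 1:] for row in a[1:]])
               for j in range(len(a)))


def matmul(a, b):
    """Matrix product: entry (i, j) is row i of a times column j of b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


I = [[1, 0], [0, 1]]
A = [[2, 1], [5, 3]]
A_inv = [[3, -1], [-5, 2]]   # candidate inverse of A, verified below
C = [[1, 4], [2, 3]]
S = [[1, 2], [2, 4]]         # second row is twice the first

product_rule_holds = det(matmul(A, C)) == det(A) * det(C)
inverse_checks = matmul(A, A_inv) == I and matmul(A_inv, A) == I

print(product_rule_holds, inverse_checks, det(A) * det(A_inv), det(S))
```

Since det S = 0, no matrix X can satisfy SX = I: Property IV would force (det S)(det X) = 1.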

We can now give the determinant criterion for the linear independence of n vectors in ℝⁿ.

Theorem 6.1 The n vectors a1, . . . , an in ℝⁿ are linearly independent if and only if

$$D(\mathbf{a}_1, \ldots, \mathbf{a}_n) \neq 0.$$

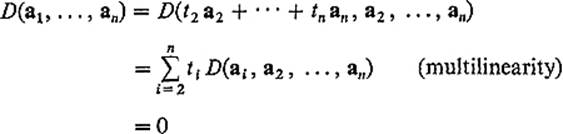

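PROOF Suppose first that they are linearly dependent; we then want to show that D(a1, . . . , an) = 0. Some one of them is then a linear combination of the others; suppose, for instance, that

$$\mathbf{a}_1 = t_2\mathbf{a}_2 + \cdots + t_n\mathbf{a}_n.$$

Then

$$D(\mathbf{a}_1, \ldots, \mathbf{a}_n) = D(t_2\mathbf{a}_2 + \cdots + t_n\mathbf{a}_n, \mathbf{a}_2, \ldots, \mathbf{a}_n) = \sum_{i=2}^{n} t_i\,D(\mathbf{a}_i, \mathbf{a}_2, \ldots, \mathbf{a}_n) = 0$$

because each D(ai, a2, . . . , an) = 0, i = 2, . . . , n, since D is alternating.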

Conversely, suppose that the vectors a1, . . . , an are linearly independent. Let A be the n × n matrix whose column vectors are a1, . . . , an, and define the linear mapping L : ℝⁿ → ℝⁿ by L(x) = Ax for each (column) vector x ∈ ℝⁿ. Since L(ei) = ai for each i = 1, . . . , n, Im L = ℝⁿ and L is one-to-one by Theorem 5.1. It therefore has a linear inverse mapping L−1 : ℝⁿ → ℝⁿ (Exercise 5.3); denote by B the matrix of L−1. Then AB = BA = I by Theorem 4.2, so it follows from the remarks preceding the statement of the theorem that det A ≠ 0, as desired.

∎
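Theorem 6.1 gives a mechanical test for linear independence. The sketch below assumes exact (integer or rational) entries, since with floating-point entries a comparison with zero would need a tolerance; the names `det` and `are_independent` and the sample vectors are illustrative assumptions.

```python
def det(a):
    """Determinant by cofactor expansion along the first row."""
    if not a:
        return 1  # determinant of the empty (0 x 0) matrix
    return sum((-1) ** j * a[0][j] * det([row[:j] + row[j + 1:] for row in a[1:]])
               for j in range(len(a)))


def are_independent(vectors):
    """Test n vectors in R^n for linear independence via D(a1, ..., an) != 0."""
    n = len(vectors)
    if any(len(v) != n for v in vectors):
        raise ValueError("expected n vectors, each with n components")
    # Build the matrix whose column vectors are the given vectors.
    matrix = [list(row) for row in zip(*vectors)]
    return det(matrix) != 0


standard_basis = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
dependent = [(1, 2, 3), (4, 5, 6), (5, 7, 9)]      # third = first + second
independent = [(1, 2, 3), (4, 5, 6), (7, 8, 10)]

print(are_independent(standard_basis),
      are_independent(dependent),
      are_independent(independent))
```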

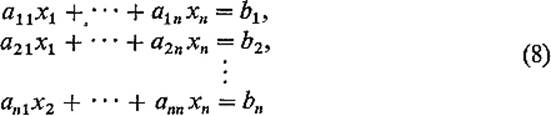

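Determinants also have important applications to the solution of linear systems of equations. Consider the system

$$\begin{aligned} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1 \\ a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2 \\ &\;\;\vdots \\ a_{n1}x_1 + a_{n2}x_2 + \cdots + a_{nn}x_n &= b_n \end{aligned} \tag{8}$$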

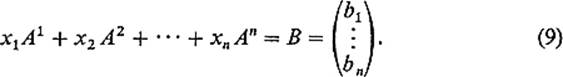

of n equations in n unknowns. In terms of the column vectors of the coefficient matrix A = (aij), (8) can be rewritten

$$x_1A^1 + x_2A^2 + \cdots + x_nA^n = B. \tag{9}$$

The situation then depends upon whether A is singular or nonsingular. If A is singular then, by Theorem 6.1, the vectors A1, . . . , An are linearly dependent, and therefore generate a proper subspace V of ℝⁿ. If B ∉ V, then (9) clearly has no solution, while if B ∈ V, it is easily seen that (9) has infinitely many solutions (Exercise 6.5).

If the matrix A is nonsingular then, by Theorem 6.1, the vectors A1, . . . , An constitute a basis for ℝⁿ, so Eq. (9) has exactly one solution. The formula given in the following theorem, for this unique solution of (8) or (9), is known as Cramer's Rule.

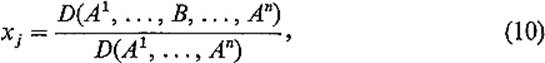

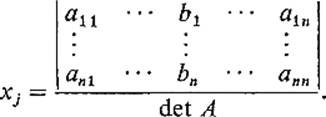

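Theorem 6.2 Let A be a nonsingular n × n matrix and let B be a column vector. If (x1, . . . , xn) is the unique solution of (9), then, for each j = 1, . . . , n,

$$x_j = \frac{D(A^1, \ldots, B, \ldots, A^n)}{\det A}, \tag{10}$$

where B occurs in the jth place instead of Aj. That is,

$$x_j = \frac{\begin{vmatrix} a_{11} & \cdots & b_1 & \cdots & a_{1n} \\ \vdots & & \vdots & & \vdots \\ a_{n1} & \cdots & b_n & \cdots & a_{nn} \end{vmatrix}}{\begin{vmatrix} a_{11} & \cdots & a_{1n} \\ \vdots & & \vdots \\ a_{n1} & \cdots & a_{nn} \end{vmatrix}}.$$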

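PROOF If x1A1 + · · · + xnAn = B, then

$$D(A^1, \ldots, B, \ldots, A^n) = D\Bigl(A^1, \ldots, \sum_{i=1}^{n} x_iA^i, \ldots, A^n\Bigr) = \sum_{i=1}^{n} x_i\,D(A^1, \ldots, A^i, \ldots, A^n)$$

by the multilinearity of D (the entry displayed in the middle occupies the jth place in each case). Then each term of this sum except the jth one vanishes by the alternating property of D, so

$$D(A^1, \ldots, B, \ldots, A^n) = x_j\,D(A^1, \ldots, A^j, \ldots, A^n) = x_j \det A.$$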

But, since det A ≠ 0, this is Eq. (10).

∎
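Formula (10) can be turned into a short program. The following is a minimal sketch using exact rational arithmetic; the names `det` and `cramer` are assumptions, and the 3 × 3 system used here is a hypothetical one chosen for illustration (it is not the system of Example 1). Cofactor expansion is slow for large n, so this illustrates the formula rather than a practical solver.

```python
from fractions import Fraction


def det(a):
    """Determinant by cofactor expansion along the first row."""
    if not a:
        return 1  # determinant of the empty (0 x 0) matrix
    return sum((-1) ** j * a[0][j] * det([row[:j] + row[j + 1:] for row in a[1:]])
               for j in range(len(a)))


def cramer(a, b):
    """Solve a x = b for nonsingular a by Cramer's rule, formula (10)."""
    d = det(a)
    if d == 0:
        raise ValueError("matrix is singular; Cramer's rule does not apply")
    solution = []
    for j in range(len(a)):
        # Replace the jth column of a by the column vector b.
        a_j = [row[:j] + [b[i]] + row[j + 1:] for i, row in enumerate(a)]
        solution.append(Fraction(det(a_j), d))
    return solution


# Hypothetical system: 2x + y - z = 1,  x + 3y + 2z = 13,  x + z = 4.
A = [[2, 1, -1],
     [1, 3, 2],
     [1, 0, 1]]
B = [1, 13, 4]

x = cramer(A, B)
print(x)
```

Substituting the computed values back into the three equations is a quick way to confirm the result.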

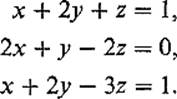

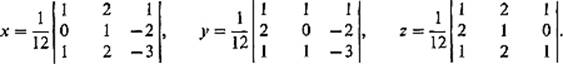

Example 1 Consider the system

Then det A = 12 ≠ 0, so (10) gives the solution

We have noted above that an invertible n × n matrix A must be nonsingular. We now prove the converse, and give an explicit formula for A−1.

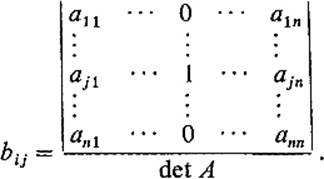

Theorem 6.3 Let A = (aij) be a nonsingular n × n matrix. Then A is invertible, with its inverse matrix B = (bij) given by

$$b_{ij} = \frac{D(A^1, \ldots, E^j, \ldots, A^n)}{\det A}, \tag{11}$$

where the jth unit column vector occurs in the ith place.

PROOF Let X = (xij) denote an unknown n × n matrix. Then, from the definition of matrix products, we find that AX = I if and only if

$$x_{1j}A^1 + x_{2j}A^2 + \cdots + x_{nj}A^n = E^j$$

for each j = 1, . . . , n. For each fixed j, this is a system of n linear equations in the n unknowns x1j, . . . , xnj, with coefficient matrix A. Since A is nonsingular, Cramer's rule gives the solution

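$$x_{ij} = \frac{D(A^1, \ldots, E^j, \ldots, A^n)}{\det A}, \quad \text{with } E^j \text{ in the } i\text{th place}.$$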

This is the formula of the theorem, so the matrix B defined by (11) satisfies AB = I.

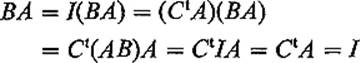

It remains only to prove that BA = I also. Since det At = det A ≠ 0, the method of the preceding paragraph gives a matrix C such that AtC = I. Taking transposes, we obtain CtA = I (see Exercise 6.4). Therefore

$$BA = I(BA) = (C^tA)(BA) = C^t(AB)A = C^tIA = C^tA = I,$$

as desired.

∎

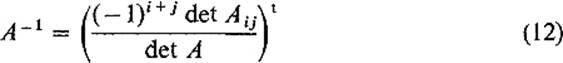

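Formula (11) can be written

$$b_{ij} = \frac{\begin{vmatrix} a_{11} & \cdots & 0 & \cdots & a_{1n} \\ \vdots & & \vdots & & \vdots \\ a_{j1} & \cdots & 1 & \cdots & a_{jn} \\ \vdots & & \vdots & & \vdots \\ a_{n1} & \cdots & 0 & \cdots & a_{nn} \end{vmatrix}}{\det A}.$$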

Expanding the numerator along the ith column in which Ej appears, and noting the reversal of subscripts which occurs because the 1 is in the jth row and ith column, we obtain

$$b_{ij} = \frac{(-1)^{i+j}\det A_{ji}}{\det A}.$$

This gives finally the formula

$$A^{-1} = \frac{1}{\det A}\Bigl((-1)^{i+j}\det A_{ji}\Bigr) \tag{12}$$

for the inverse of the nonsingular matrix A.
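The formula for the inverse in terms of the submatrices Aji can be sketched as follows, again with exact rational arithmetic. The names `minor`, `det`, and `inverse` and the sample matrix are assumptions made for illustration; note the reversal of indices, so that entry (i, j) of the inverse uses the submatrix obtained by deleting row j and column i.

```python
from fractions import Fraction


def minor(a, i, j):
    """Return the submatrix A_ij: delete row i and column j (0-based)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(a) if k != i]


def det(a):
    """Determinant by cofactor expansion along the first row."""
    if not a:
        return 1  # determinant of the empty (0 x 0) matrix
    return sum((-1) ** j * a[0][j] * det(minor(a, 0, j)) for j in range(len(a)))


def inverse(a):
    """Inverse of a nonsingular matrix: b_ij = (-1)^(i+j) det A_ji / det A."""
    d = det(a)
    if d == 0:
        raise ValueError("matrix is singular and has no inverse")
    n = len(a)
    return [[Fraction((-1) ** (i + j) * det(minor(a, j, i)), d)
             for j in range(n)]
            for i in range(n)]


# Hypothetical sample matrix, chosen only for illustration.
A = [[2, 1, -1],
     [1, 3, 2],
     [1, 0, 1]]
A_inv = inverse(A)
for row in A_inv:
    print(row)
```

Multiplying `A` by `A_inv` in either order should give the identity matrix, in agreement with Theorem 6.3.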

Exercises

6.1 Deduce formulas (4) and (5) from Property I.

6.2 Deduce formula (6) from Property I. Hint: Compute D(a1, . . . , ai + aj, . . . , ai + aj, . . . , an), where ai + aj appears in both the ith place and the jth place.

6.3 Prove that the inverse of an n × n matrix is unique. That is, if B and C are both inverses of the n × n matrix A, show that B = C. Hint: Look at the product CAB.

6.4 If A and B are n × n matrices, show that (AB)t = Bt At.

6.5 If the linearly dependent vectors a1, . . . , an generate the subspace V of ℝⁿ, and b ∈ V, show that b can be expressed in infinitely many ways as a linear combination of a1, . . . , an.

6.6 Suppose that A = (aij) is an n × n triangular matrix in which all elements below the principal diagonal are zero; that is, aij = 0 if i > j. Show that det A = a11a22 · · · ann. In particular, this is true if A is a diagonal matrix in which all elements off the principal diagonal are zero.

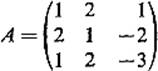

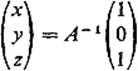

6.7 Compute using formula (12) the inverse A−1 of the coefficient matrix

of the system of equations in Example 1. Then show that the solution

agrees with that found using Cramer's rule.

6.8 Let ai = (ai1, ai2, . . . , ain), i = 1, . . . , k < n, be k linearly dependent vectors in ℝⁿ. Then show that every k × k submatrix of the matrix

$$\begin{pmatrix} a_{11} & \cdots & a_{1n} \\ \vdots & & \vdots \\ a_{k1} & \cdots & a_{kn} \end{pmatrix}$$

has zero determinant.

6.9 If A is an n × n matrix, and x and y are (column) vectors in ℝⁿ, show that (Ax)·y = x·(Aty), and then that (Ax)·(Ay) = x·(AtAy).

6.10 The n × n matrix A is said to be orthogonal if and only if AAt = I, so A is invertible with A−1 = At. The linear transformation L: ℝⁿ → ℝⁿ is said to be orthogonal if and only if its matrix is orthogonal. Use the identity of the previous exercise to show that the linear transformation L is orthogonal if and only if it is inner product preserving (see Exercise 4.6).

6.11 (a) Show that the n × n matrix A is orthogonal if and only if its column vectors are orthonormal. (b) Show that the n × n matrix A is orthogonal if and only if its row vectors are orthonormal.

6.12 If a1, . . . , an and b1, . . . , bn are two different orthonormal bases for ℝⁿ, show that there is an orthogonal transformation L: ℝⁿ → ℝⁿ with L(ai) = bi for each i = 1, . . . , n. Hint: If A and B are the n × n matrices whose column vectors are a1, . . . , an and b1, . . . , bn, respectively, why is the matrix BA−1 orthogonal?